Generative AI tools are starting to revolutionize how many industries engage with regulatory obligations. Notwithstanding the known limitations of current models, many companies and government entities have decided to integrate AI platforms into their processes to cut costs and improve efficiency.

Adopters of these tools claim that they can gather background information, draft outlines, and spot gaps better or more efficiently than unassisted humans. Yet the very speed and fluency that make these systems attractive can also mask serious risks, especially when an unverified draft migrates into a regulated submission or scientific manuscript.

Over the past two years a series of public missteps has underscored the dangers of relying on AI output without rigorous human review:

These examples matter for medical and regulatory writers because they illustrate a central truth: large language models can imitate scientific tone but do not guarantee scientific truth. Citations, statistics, and even study designs may be "hallucinated," and any error that slips into an Investigational New Drug (IND) application or Clinical Evaluation Report (CER) could derail approval timelines or, worse, compromise patient safety.

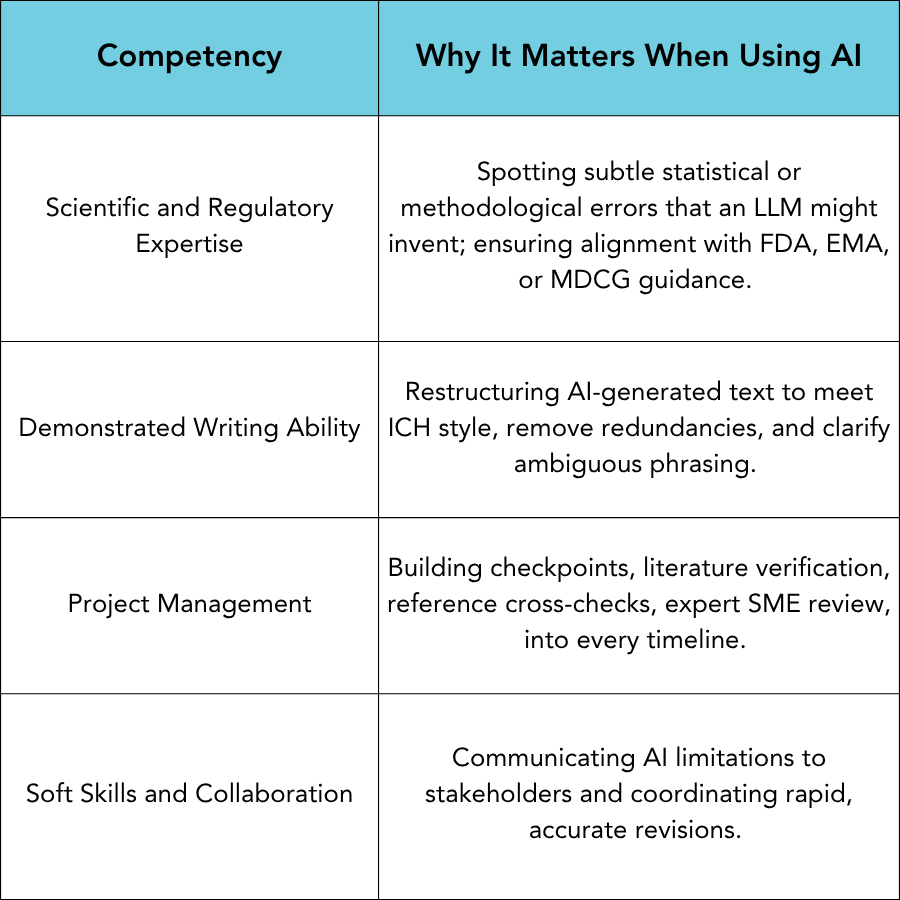

Technologists often frame AI as a replacement for writers. At GLOBAL, we view it as a potential force multiplier, but only in the hands of experienced professionals who can be trusted to audit and refine its output. Furthermore, we believe that AI should never be used without full disclosure, a description of methods, a justification for its use, and appropriate safeguards to protect proprietary data. The core competencies outlined in our earlier article on "Hiring the Right Medical Writer" become even more critical in the AI era:

In today’s environment, almost anyone can use AI to create text that sounds knowledgeable. Unfortunately, that surface-level polish can give a false sense of expertise. An organization with limited regulatory experience can appear credible simply by prompting a generative tool and presenting the results as its own. This is particularly dangerous in regulated industries, where a misstatement or misinterpretation can delay approvals, damage reputations, or jeopardize patient safety.

The most serious risk, however, is that incorrect or fabricated information may slip past internal reviewers and make its way into a submission, similar to what we have already seen in other contexts. When that happens, the consequences can be severe: regulatory delays, public retractions, loss of credibility with authorities, and even harm to patients. With AI-generated content, the margin for error becomes thinner.

If you are searching for a medical writing vendor, ask how they use AI, whether they disclose it, and what checks are in place to ensure quality and compliance. A trusted regulatory writing firm will not only be transparent but will also demonstrate a structured review and validation process behind every deliverable.

Our team of experienced regulatory writers and consultants is here to provide the support you need. Whether it’s regulatory submissions, project management, or individualized training, we deliver high-quality, compliant content to keep your projects on track. Let us be your trusted partner, ensuring clarity, precision, and expertise in every deliverable.

Contact us today to learn how our writing and consulting services can support your team!
